Alongside has big plans to break negative cycles before they turn clinical, said Dr. Elsa Friis, a licensed psychologist for the company, whose background includes identifying autism, ADHD and suicide risk using Large Language Models (LLMs).

The Alongside app currently partners with more than 200 schools across 19 states, and collects student chat data for their annual youth mental health report, which is not a peer reviewed publication. Their findings this year, said Friis, were surprising. With almost no mention of social media or cyberbullying, the student users reported that their most pressing issues had to do with feeling overwhelmed, poor sleep habits and relationship problems.

Alongside boasts positive and insightful data points in their report and in a pilot study conducted earlier in 2025, but experts like Ryan McBain, a health researcher at the RAND Corporation, said that the data isn’t robust enough to understand the real implications of these types of AI mental health tools.

“If you’re going to market a product to numerous children in adolescence throughout the United States through school systems, they need to meet some minimum standard in the context of actual rigorous trials,” said McBain.

But beneath all of the report’s data, what does it really mean for students to have 24/7 access to a chatbot that is designed to address their mental health, social, and behavioral concerns?

What’s the difference between AI chatbots and AI companions?

AI companions fall under the larger umbrella of AI chatbots. And while chatbots are becoming more and more sophisticated, AI companions are distinct in the ways that they interact with users. AI companions tend to have fewer built-in guardrails, meaning they are coded to endlessly adapt to user input; AI chatbots, on the other hand, might have more guardrails in place to keep a conversation on track or on topic. For example, a troubleshooting chatbot for a food delivery company has specific instructions to carry on conversations that only pertain to food delivery and app issues, and isn’t designed to stray from the topic because it doesn’t know how to.

But the line between AI chatbot and AI companion becomes blurred as more and more people use chatbots like ChatGPT as an emotional or therapeutic sounding board. The people-pleasing features of AI companions can and have become a growing issue of concern, especially when it comes to teens and other vulnerable people who use these companions to, at times, validate their suicidality, delusions and unhealthy dependency on these AI companions.

A recent report from Common Sense Media expanded on the harmful effects that AI companion use has on adolescents and teens. According to the report, AI platforms like Character.AI are “designed to simulate humanlike interaction” in the form of “virtual friends, confidants, and even therapists.”

Although Common Sense Media found that AI companions “pose ‘unacceptable risks’ for users under 18,” young people are still using these platforms at high rates.

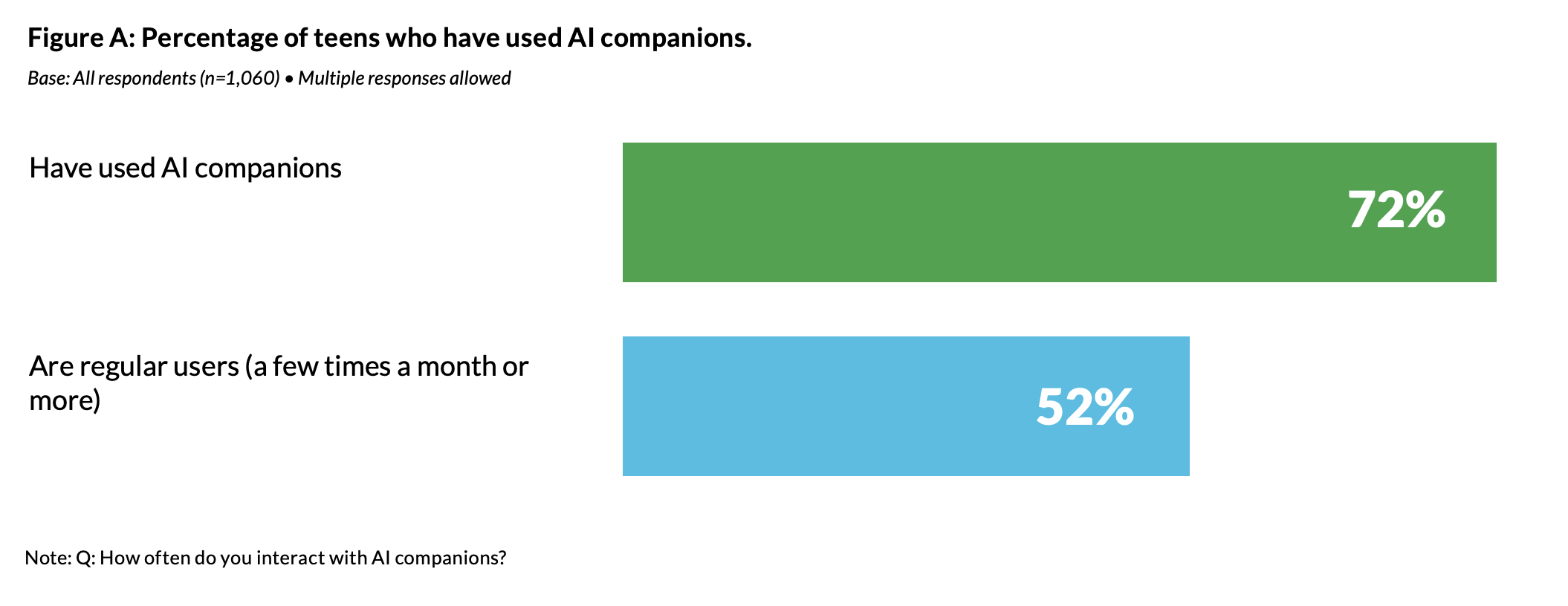

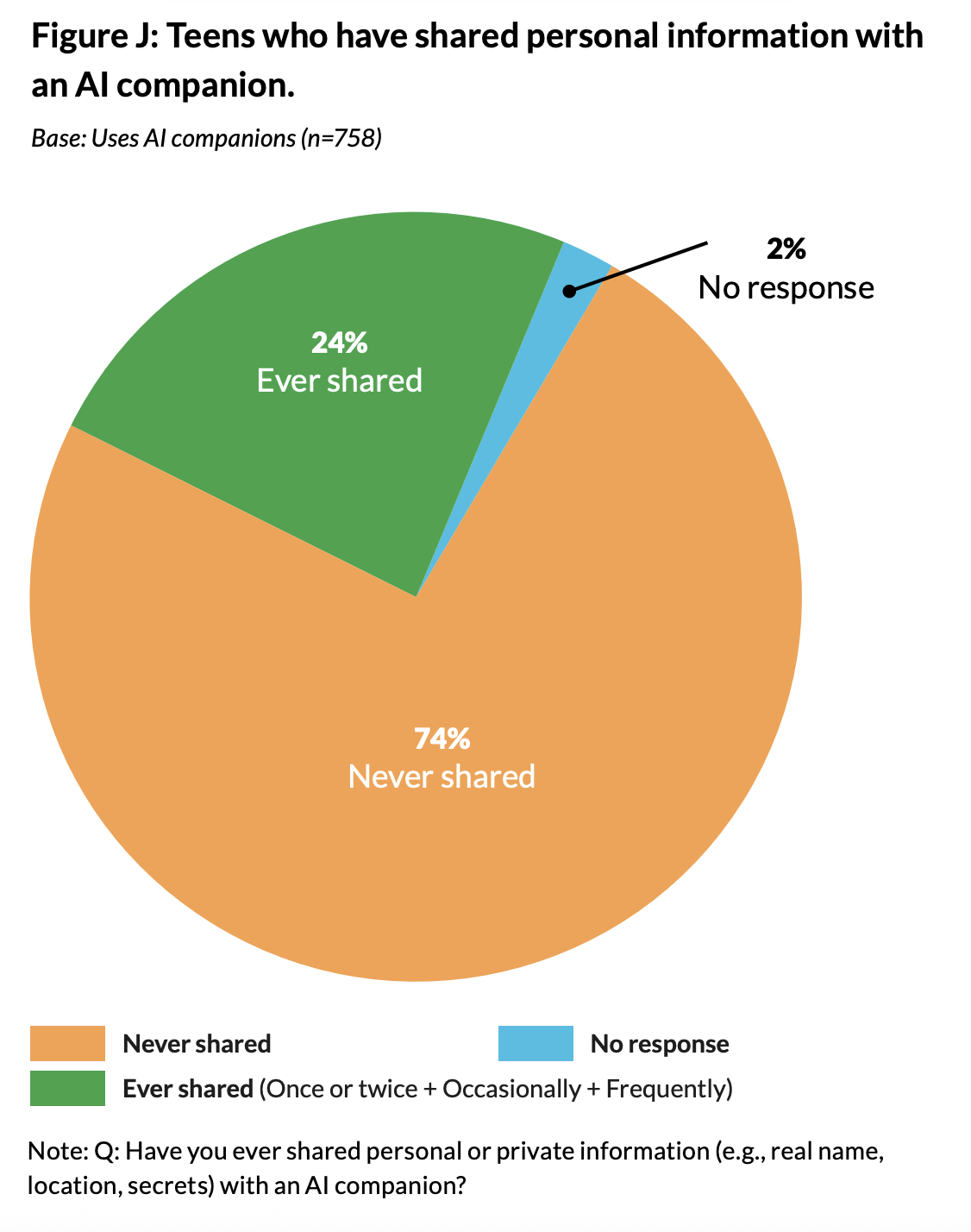

Seventy two percent of the 1,060 teens surveyed by Common Sense said that they had used an AI companion before, and 52% of teens surveyed are “regular users” of AI companions. However, for the most part, the report found that the majority of teens value human friendships more than AI companions, do not share personal information with AI companions and hold some level of skepticism toward AI companions. Thirty nine percent of teens surveyed also said that they apply skills they practiced with AI companions, like expressing emotions, apologizing and standing up for themselves, in real life.

When Common Sense Media’s recommendations for safer AI use are compared with Alongside’s chatbot features, the chatbot does meet some of these recommendations, like crisis intervention, usage limits and skill-building elements. According to Mehta, there is a big difference between an AI companion and Alongside’s chatbot. Alongside’s chatbot has built-in safety features that require a human to review certain conversations based on trigger words or concerning phrases. And unlike tools like AI companions, Mehta continued, Alongside discourages student users from chatting too much.

One of the biggest challenges that chatbot developers like Alongside face is mitigating people-pleasing tendencies, a defining characteristic of AI companions, said Friis. Guardrails have been put into place by Alongside’s team to avoid people-pleasing, which can turn scary. “We aren’t going to adapt to swear words, we aren’t going to adapt to bad behaviors,” said Friis. But it’s up to Alongside’s team to anticipate and determine which language falls into harmful categories, including when students try to use the chatbot for cheating.

According to Friis, Alongside errs on the side of caution when it comes to determining what kind of language constitutes a concerning statement. If a chat is flagged, educators at the partner school are pinged on their phones. In the meantime, the student is prompted by Kiwi to complete a crisis assessment and directed to emergency service numbers if needed.

Addressing staffing shortages and resource gaps

In school settings where the ratio of students to school counselors is often impossibly high, Alongside acts as a triaging tool or liaison between students and their trusted adults, said Friis. For example, a conversation between Kiwi and a student might consist of back-and-forth troubleshooting about creating healthier sleeping habits. The student might be prompted to talk to their parents about making their room darker or adding in a nightlight for a better sleep environment. The student might then come back to their chat after a conversation with their parents and tell Kiwi whether that solution worked. If it did, then the conversation concludes, but if it didn’t then Kiwi can suggest other potential solutions.

According to Dr. Friis, a few 5-minute back-and-forth conversations with Kiwi would translate to days if not weeks of conversations with a school counselor, who has to prioritize students with the most severe issues and needs, like repeated suspensions, suicidality and dropping out.

Using digital technologies to triage health issues is not a new idea, said RAND researcher McBain, who pointed to doctors’ waiting rooms that greet patients with a health screener on an iPad.

“If a chatbot is a slightly more dynamic user interface for collecting that sort of information, then I think, in theory, that is not an issue,” McBain continued. The unanswered question is whether chatbots like Kiwi perform better, as well, or worse than a human would, but the only way to compare the human to the chatbot would be through randomized control trials, said McBain.

“One of my biggest fears is that companies are rushing in to try to be the first of their kind,” said McBain, and in the process are lowering the safety and quality standards under which these companies and their academic partners circulate optimistic and eye-catching results from their product, he continued.

But there’s mounting pressure on school counselors to meet student needs with limited resources. “It’s really hard to create the space that [school counselors] want to create. Counselors want to have those interactions. It’s the system that’s making it really hard to have them,” said Friis.

Alongside offers their school partners professional development and consultation services, as well as quarterly summary reports. A lot of the time these services revolve around packaging data for grant proposals or for presenting compelling information to superintendents, said Friis.

A research-backed approach

On their website, Alongside touts the research-backed methods used to develop their chatbot, and the company has partnered with Dr. Jessica Schleider at Northwestern University, who studies and develops single-session mental health interventions (SSIs), which are designed to address and provide resolution to mental health concerns without the expectation of any follow-up sessions. A typical counseling intervention is, at minimum, 12 weeks long, so single-session interventions were appealing to the Alongside team, but “what we know is that no product has ever been able to really effectively do that,” said Friis.

However, Schleider’s Lab for Scalable Mental Health has published multiple peer-reviewed trials and clinical research demonstrating positive outcomes for implementation of SSIs. The Lab for Scalable Mental Health also provides open source materials for parents and professionals interested in implementing SSIs for teens and young people, and their initiative Project YES offers free and anonymous online SSIs for youth experiencing mental health concerns.

What happens to a child’s data when using AI for mental health interventions?

Alongside gathers student data from their conversations with the chatbot, like mood, hours of sleep, exercise habits, social habits and online interactions, among other things. While this data can offer schools insight into their students’ lives, it does raise questions about student surveillance and data privacy.

Alongside, like many other generative AI tools, uses other LLMs’ APIs (application programming interfaces), meaning they include another company’s LLM code, like that used for OpenAI’s ChatGPT, in their chatbot programming, which processes chat input and produces chat output. They also have their own in-house LLMs, which Alongside’s AI team has developed over a couple of years.

Growing concerns about how user data and personal information are stored are especially pertinent when it comes to sensitive student data. The Alongside team has opted in to OpenAI’s zero data retention policy, which means that none of the student data is stored by OpenAI or other LLMs that Alongside uses, and none of the data from chats is used for training purposes.

Because Alongside operates in schools across the U.S., they are FERPA and COPPA compliant, but the data has to be stored somewhere. So, students’ personally identifiable information (PII) is uncoupled from their chat data when that information is stored by Amazon Web Services (AWS), a cloud-based industry standard for private data storage used by tech companies around the world.

Alongside uses an encryption process that disaggregates the student PII from their chats. Only when a conversation gets flagged, and needs to be seen by humans for safety reasons, does the student PII connect back to the chat in question. In addition, Alongside is required by law to store student chats and information when it has alerted a crisis, and parents and guardians are free to request that information, said Friis.
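To make the idea concrete, here is a minimal sketch of keeping identity and chat content apart until a chat is flagged. It is not Alongside’s system: the article describes an encryption process, while this illustration swaps in a simpler random-token lookup, and the class name, method names and fields are all hypothetical.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class PseudonymizedChatStore:
    """Hypothetical store: chats are keyed by a random token, never by PII."""

    _identity_vault: dict = field(default_factory=dict)  # token -> PII record
    _chats: dict = field(default_factory=dict)           # token -> list of messages
    _flagged: set = field(default_factory=set)           # tokens awaiting human review

    def enroll(self, student_name: str, school: str) -> str:
        # The token is random, so it reveals nothing about the student.
        token = secrets.token_hex(16)
        self._identity_vault[token] = {"name": student_name, "school": school}
        self._chats[token] = []
        return token

    def add_message(self, token: str, text: str) -> None:
        self._chats[token].append(text)

    def flag(self, token: str) -> None:
        # Assumption: some separate safety check decides when to call this.
        self._flagged.add(token)

    def chat_for_analytics(self, token: str) -> list:
        # Reporting code sees chat text only, with no identity attached.
        return list(self._chats[token])

    def identity_for_review(self, token: str) -> dict:
        # Identity is re-linked only for chats that have been flagged.
        if token not in self._flagged:
            raise PermissionError("chat is not flagged; identity stays unlinked")
        return dict(self._identity_vault[token])
```

In this sketch, a reviewer calling `identity_for_review` on an unflagged chat gets a `PermissionError`, which mirrors the article’s description that PII connects back to a chat only once it has been flagged for safety reasons.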

Typically, parental consent and student data policies are handled through the school partners, and as with any school service offered, like counseling, there is a parental opt-out option, which must adhere to state and district guidelines on parental consent, said Friis.

Alongside and their school partners put guardrails in place to make sure that student data is protected and confidential. However, data breaches can still happen.

How the Alongside LLMs are trained

One of Alongside’s in-house LLMs is used to identify potential crises in student chats and alert the necessary adults to that crisis, said Mehta. This LLM is trained on student and synthetic outputs and keywords that the Alongside team enters manually. And because language changes often and isn’t always straightforward or easily recognizable, the team keeps an ongoing log of different words and phrases, like the popular abbreviation “KMS” (shorthand for “kill myself”), that they retrain this particular LLM to understand as crisis driven.
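As a rough illustration of what a hand-maintained phrase log could look like in practice, the sketch below screens a message against a small set of phrases. It is a plain keyword screen rather than the trained LLM the article describes, and the function names and the phrase set are hypothetical; only “KMS” and its expansion come from the article.

```python
import re

# Hypothetical phrase log; a real one would be curated by a clinical team.
CRISIS_PHRASES = {"kms", "kill myself"}


def build_pattern(phrases):
    """Compile a case-insensitive, whole-word pattern from the phrase log."""
    # Longest phrases first, so multi-word entries win over shorter overlaps.
    ordered = sorted(phrases, key=len, reverse=True)
    alternatives = "|".join(re.escape(p) for p in ordered)
    return re.compile(rf"\b(?:{alternatives})\b", re.IGNORECASE)


def find_crisis_phrases(message, pattern):
    """Return the logged phrases found in a message, lowercased."""
    return [m.group(0).lower() for m in pattern.finditer(message)]


pattern = build_pattern(CRISIS_PHRASES)
matches = find_crisis_phrases("honestly i might KMS", pattern)
```

Adding a new term means updating the set and rebuilding the pattern, which loosely mirrors the manual upkeep Mehta describes. In keeping with the article, a match here would be a reason to route the chat to a human, not to act automatically.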

Although, according to Mehta, the process of manually inputting data to train the crisis-assessing LLM is one of the biggest efforts that he and his team have to tackle, he doesn’t see a future in which this process could be automated by another AI tool. “I wouldn’t be comfortable automating something that could trigger a crisis [response],” he said, the preference being that the clinical team led by Friis contribute to this process through a clinical lens.

But with the potential for rapid growth in Alongside’s number of school partners, these processes will be very difficult to keep up with manually, said Robbie Torney, senior director of AI programs at Common Sense Media. Although Alongside emphasized their process of including human input in both their crisis response and LLM development, “you can’t necessarily scale a system like [this] easily because you’re going to run into the need for more and more human review,” continued Torney.

Alongside’s 2024-25 report tracks conflicts in students’ lives, but doesn’t distinguish whether those conflicts are happening online or in person. But according to Friis, it doesn’t really matter where peer-to-peer conflict is taking place. Ultimately, it’s most important to be person-centered, said Dr. Friis, and remain focused on what really matters to each individual student. Alongside does offer proactive skill-building lessons on social media safety and digital stewardship.

When it comes to sleep, Kiwi is programmed to ask students about their phone habits “because we know that having your phone at night is one of the main things that’s gonna keep you up,” said Dr. Friis.

Universal mental health screeners available

Alongside also offers an in-app universal mental health screener to school partners. One district in Corsicana, Texas, an old oil town situated outside of Dallas, found the data from the universal mental health screener invaluable. According to Margie Boulware, executive director of special programs for Corsicana Independent School District, the community has had issues with gun violence, but the district didn’t have a way of surveying their 6,000 students on the mental health effects of traumatic events like these until Alongside was introduced.

According to Boulware, 24% of students surveyed in Corsicana had a trusted adult in their life, six percentage points fewer than the average in Alongside’s 2024-25 report. “It’s a little shocking how few kids are saying ‘we actually feel connected to an adult,’” said Friis. According to research, having a trusted adult helps with young people’s social and emotional health and wellbeing, and can also counter the effects of adverse childhood experiences.

In an area where the school district is the biggest employer and where 80% of students are economically disadvantaged, mental health resources are bare. Boulware drew a correlation between the uptick in gun violence and the high percentage of students who said that they did not have a trusted adult in their home. And although the data given to the district by Alongside did not directly correlate with the violence that the community had been experiencing, it was the first time that the district was able to take a more comprehensive look at student mental health.

So the district formed a task force to tackle these issues of increased gun violence and decreased mental health and belonging. And for the first time, rather than having to guess how many students were struggling with behavioral issues, Boulware and the task force had representative data to build off of. And without the universal screening survey that Alongside delivered, the district would have stuck to their end-of-year feedback survey, asking questions like “How was your year?” and “Did you like your teacher?”

Boulware believed that the universal screening survey encouraged students to self-reflect and answer questions more honestly when compared with previous feedback surveys the district had conducted.

According to Boulware, student resources, and mental health resources in particular, are scarce in Corsicana. But the district does have a team of counselors, including 16 academic counselors and six social emotional counselors.

With not enough social emotional counselors to go around, Boulware said that a lot of tier one students, or students who don’t require regular one-on-one or group academic or behavioral interventions, fly under their radar. She saw Alongside as an easily accessible tool for students that offers discreet coaching on mental health, social and behavioral issues. And it also offers educators and administrators like herself a glimpse behind the curtain into student mental health.

Boulware praised Alongside’s proactive features, like gamified skill building for students who struggle with time management or task organization and who can earn points and badges for completing certain skills lessons.

And Alongside fills a crucial gap for staff in Corsicana ISD. “The amount of hours that our kiddos are on Alongside … are hours that they’re not waiting outside of a student support counselor office,” which, because of the low ratio of counselors to students, allows the social emotional counselors to focus on students experiencing a crisis, said Boulware. There is “no way I could have allotted the resources” that Alongside brings to Corsicana, Boulware added.

The Alongside app requires 24/7 human monitoring by their school partners. This means that designated educators and administrators in each district and school are assigned to receive alerts at all hours of the day, any day of the week, including during holidays. This feature was a concern for Boulware at first. “If a kiddo’s struggling at three o’clock in the morning and I’m asleep, what does that look like?” she said. Boulware and her team had to hope that an adult sees a crisis alert very quickly, she continued.

This 24/7 human monitoring system was tested in Corsicana last Christmas break. An alert came in and it took Boulware ten minutes to see it on her phone. By that time, the student had already begun working on an assessment survey prompted by Alongside, the principal who had seen the alert before Boulware had called her, and she had received a text message from the student support council. Boulware was able to contact their local chief of police and address the crisis unfolding. The student was able to connect with a counselor that same afternoon.